Automate Docker Deployment To EC2 Using Bitbucket Pipelines And ECR

Introduction

In this tutorial, we’ll explore how to automate the deployment of Dockerized applications to Amazon Elastic Compute Cloud (EC2) instances using Bitbucket Pipelines and Amazon Elastic Container Registry (ECR). Every push to the repository builds a Docker image, pushes it to ECR, and redeploys it on EC2. This gives us continuous integration and continuous deployment (CI/CD) for containerized applications, so releases no longer require manual steps on the server.

Prerequisites

- A Bitbucket account with a repository containing your Dockerized application

- An Amazon Web Services (AWS) account with permission to use EC2, ECR, and IAM

- Docker and the AWS CLI installed on your local machine

- Basic knowledge of Docker, Bitbucket Pipelines, and AWS services

Setting Up EC2 Instances

- Log in to your AWS Management Console using your AWS account credentials.

- In the AWS Management Console, navigate to the EC2 service, for example by searching for “EC2” in the search bar at the top of the console.

- Click “Launch instance” in the EC2 dashboard and select a suitable Amazon Machine Image (AMI) for your application. You can choose one of the AMIs provided by AWS or a custom AMI; the later steps assume an Amazon Linux AMI, which uses ec2-user as its default login user.

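- Configure the instance details, including:

  - Instance type: choose an instance type that meets your application’s requirements.

  - Security groups: select Create security group, then check “Allow HTTPS traffic from the internet” and “Allow HTTP traffic from the internet”. Keep SSH traffic allowed as well, because both you and Bitbucket Pipelines connect to the instance over SSH.

  - Key pair: create a new key pair and download its .pem file. You will need it to SSH into the instance and to let Bitbucket Pipelines connect.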

- Review the instance details and launch the instance.

Create an ECR Repository

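- Navigate to the Amazon ECR service in the AWS Management Console, click “Create repository”, and provide a name for your repository.

- Note the repository URL, which has the form <account_id>.dkr.ecr.<region>.amazonaws.com/<repository_name>. We will use it later in the tutorial, including as the ECR_URL variable in Bitbucket Pipelines.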
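Because the repository URL follows a fixed pattern, it can be assembled from the account ID, region, and repository name. A small sketch; the function names are illustrative and not part of any AWS SDK:

```python
def ecr_registry(account_id: str, region: str) -> str:
    """Registry host used by `docker login`."""
    # AWS account IDs are always 12 digits
    if not (len(account_id) == 12 and account_id.isdigit()):
        raise ValueError("AWS account IDs are 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_repository_uri(account_id: str, region: str, repository: str) -> str:
    """Repository URL used with `docker tag` and `docker push` (and as ECR_URL)."""
    return f"{ecr_registry(account_id, region)}/{repository}"


if __name__ == "__main__":
    # Example values only; substitute your own account ID, region and repository
    print(ecr_repository_uri("123456789012", "ap-southeast-1", "my-django-app"))
```

The registry host alone is what `docker login` expects; the full repository URL is what images are tagged with.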

Configuring IAM Roles

To allow EC2 instances to access ECR, we need to configure an IAM role with the necessary permissions.

- Navigate to the IAM service in the AWS Management Console.

- Select “Roles” and click on “Create role” to create a new IAM role.

- Choose the service that will utilize this role, which is EC2 in this case.

- In the “Attach permissions policies” step, search for and select the AmazonEC2ContainerRegistryReadOnly policy. This policy grants read-only access to ECR.

- Follow through the remaining steps, providing a name for the role and reviewing details.

- Click on “Create role” to establish the IAM role.
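The role has two halves: choosing EC2 as the trusted service gives it a trust policy that lets EC2 instances assume it, while the attached managed policy supplies the ECR permissions. The sketch below simply writes both down as Python data for reference; it does not call AWS, and the constant names are illustrative:

```python
import json

# Trust policy that allows the EC2 service to assume the role
EC2_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# AWS managed policy selected in the permissions step
ECR_READ_ONLY_POLICY_ARN = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"


def trust_policy_json() -> str:
    """Render the trust policy as the JSON document IAM stores."""
    return json.dumps(EC2_TRUST_POLICY, indent=2)


if __name__ == "__main__":
    print(trust_policy_json())
    print(ECR_READ_ONLY_POLICY_ARN)
```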

Assign IAM Role to EC2 Instance

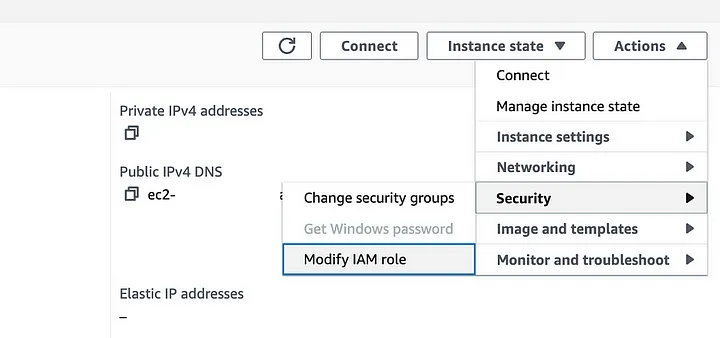

- Return to the EC2 page and select the previously created EC2 instance.

- Navigate to Actions > Security > Modify IAM role.

- Choose the IAM role created in the previous step.

- Click on “Update IAM role” to grant the EC2 instance permission to access ECR and pull the Docker image.

Build and Push Docker Images to ECR

Create a new file named Dockerfile in our Django project root directory with the following content:

# Use an official Python runtime as a parent image
FROM python:3.9

# Set environment variables
ENV PYTHONDONTWRITEBYTECODE=1
ENV PYTHONUNBUFFERED=1

# Install PostgreSQL client tools and clean up the apt cache
RUN apt-get update && \
    apt-get install -y postgresql-client && \
    rm -rf /var/lib/apt/lists/*

# Set the working directory in the container
WORKDIR /usr/src/app

# Copy just the requirements file first to leverage Docker caching
COPY requirements.txt .

# Install dependencies
RUN pip install --upgrade pip && pip install -r requirements.txt

# Copy the rest of the application code
COPY . .

# Set the script as executable
RUN chmod +x /usr/src/app/entrypoint.sh

EXPOSE 8000

This Dockerfile assumes that requirements.txt and entrypoint.sh are in the root of our Django project, alongside the application code. It defines no CMD because docker-compose.yml, shown later, supplies both the entrypoint and the command.
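The Dockerfile copies requirements.txt and runs chmod on entrypoint.sh, so the build fails partway through if either file is missing from the build context. A small pre-build check, assuming the file list below matches your project layout (the helper is hypothetical, not part of Docker):

```python
from pathlib import Path

# Files the Dockerfile above relies on; adjust if your layout differs
REQUIRED_FILES = ("Dockerfile", "requirements.txt", "entrypoint.sh")


def missing_build_files(project_dir: str) -> list[str]:
    """Return the required files that are absent from the build context."""
    root = Path(project_dir)
    return [name for name in REQUIRED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_build_files(".")
    print("Missing:", ", ".join(missing) if missing else "nothing")
```

Run it from the project root before `docker build`; an empty result means the files the Dockerfile expects are present.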

Build the Docker image by running the following command in our terminal:

docker build -t my-django-app .

Tag the image with the ECR repository URL:

docker tag my-django-app:latest <account_id>.dkr.ecr.<region>.amazonaws.com/<repository_name>:latest

Replace <account_id>, <region>, and <repository_name> with your actual values. Then authenticate Docker to our Amazon ECR registry:

aws ecr get-login-password --region <region> | docker login --username AWS --password-stdin <account_id>.dkr.ecr.<region>.amazonaws.com

Push the Docker image to ECR:

docker push <account_id>.dkr.ecr.<region>.amazonaws.com/<repository_name>:latest
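The four commands above can also be driven from a script, which is handy for pushing from your machine before the pipeline exists. A minimal sketch using Python's subprocess module; the function names are illustrative, and it assumes docker and the AWS CLI are on your PATH:

```python
import subprocess


def build_tag_push_commands(local_name: str, registry: str, repository: str) -> list[list[str]]:
    """Return the docker build, tag and push commands from the steps above."""
    remote = f"{registry}/{repository}:latest"
    return [
        ["docker", "build", "-t", local_name, "."],
        ["docker", "tag", f"{local_name}:latest", remote],
        ["docker", "push", remote],
    ]


def ecr_login(registry: str, region: str) -> None:
    """Same as: aws ecr get-login-password | docker login --password-stdin."""
    password = subprocess.run(
        ["aws", "ecr", "get-login-password", "--region", region],
        check=True, capture_output=True, text=True,
    ).stdout
    subprocess.run(
        ["docker", "login", "--username", "AWS", "--password-stdin", registry],
        input=password, check=True, text=True,
    )


def deploy_image(local_name: str, registry: str, repository: str, region: str) -> None:
    """Build, tag, log in, and push; check=True stops at the first failure."""
    build, tag, push = build_tag_push_commands(local_name, registry, repository)
    subprocess.run(build, check=True)
    subprocess.run(tag, check=True)
    ecr_login(registry, region)  # authenticate before pushing
    subprocess.run(push, check=True)
```

For example, `deploy_image("my-django-app", "<account_id>.dkr.ecr.<region>.amazonaws.com", "<repository_name>", "<region>")` runs the same sequence as the commands above.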

Encode the SSH Key

Bitbucket Pipelines needs our EC2 private key to connect to the instance over SSH and deploy the Docker image. The key.pem file spans multiple lines, and the atlassian/scp-deploy and atlassian/ssh-run pipes used later expect the SSH_KEY variable to contain a base64-encoded key, so we encode the file before storing it as a repository variable.

Convert our key.pem file to base64 format using a Python script:

import base64
from pathlib import Path

# Path to the .pem file downloaded when the EC2 key pair was created
pem_bytes = Path("/path/to/your/private/key").read_bytes()

# b64encode returns a single line with no newlines, which suits a repository variable
base64_string = base64.b64encode(pem_bytes).decode("ascii")

print(f"Encoded string: {base64_string}")

Once we have the base64-encoded SSH key, enable Pipelines in the repository settings and add the key as a repository variable named SSH_KEY, marking it as Secured so its value is masked in the logs.
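Before pasting the encoded value into Bitbucket, you can confirm that it is a single line and decodes back to the original key. A minimal sketch; encode_key and decodes_to_key are illustrative helper names:

```python
import base64


def encode_key(pem_bytes: bytes) -> str:
    """Base64-encode the key as one line (b64encode never inserts newlines)."""
    return base64.b64encode(pem_bytes).decode("ascii")


def decodes_to_key(encoded: str, pem_bytes: bytes) -> bool:
    """Return True if `encoded` is valid base64 for exactly `pem_bytes`."""
    try:
        # validate=True rejects characters outside the base64 alphabet
        return base64.b64decode(encoded, validate=True) == pem_bytes
    except ValueError:  # binascii.Error is a subclass of ValueError
        return False


if __name__ == "__main__":
    sample = b"-----BEGIN RSA PRIVATE KEY-----\nexample\n-----END RSA PRIVATE KEY-----\n"
    encoded = encode_key(sample)
    print("single line:", "\n" not in encoded)
    print("round trip ok:", decodes_to_key(encoded, sample))
```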

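We’ll also need to add the EC2 instance to the pipeline’s known hosts: in the repository’s Pipelines SSH keys settings, enter the instance’s public DNS name or IP address in the Host address field, click Fetch, and add the host.

Finally, add the other repository variables that bitbucket-pipelines.yml references: AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY (for an IAM user allowed to push to ECR), AWS_REGION, IMAGE_NAME (the ECR repository name), ECR_URL (the repository URL noted earlier), and EC2_HOSTNAME (the instance’s public DNS name or IP address). Mark the AWS keys as Secured as well.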
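The pipeline reads its settings from repository variables. If you export the same variables in a local shell to try the deployment by hand, a small sketch can report which ones are still unset. The names below are taken from bitbucket-pipelines.yml in this tutorial; the helper itself is hypothetical and not part of Bitbucket:

```python
import os
from collections.abc import Mapping

# Variables referenced by bitbucket-pipelines.yml in this tutorial
REQUIRED_VARIABLES = (
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_REGION",
    "IMAGE_NAME",
    "ECR_URL",
    "EC2_HOSTNAME",
    "SSH_KEY",
)


def missing_variables(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the required variable names that are unset or empty in `env`."""
    return [name for name in REQUIRED_VARIABLES if not env.get(name)]


if __name__ == "__main__":
    missing = missing_variables()
    print("Missing:", ", ".join(missing) if missing else "none")
```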

Create the run.sh File

Next, we’ll create a run.sh file that pulls the Docker image and starts the containers. The pipeline copies this file to the EC2 instance and executes it over SSH. It logs Docker in to ECR, stops the running containers, pulls the latest images, and starts the containers again in the background:

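#!/bin/sh
# Stop at the first failing command
set -e
# Replace ap-southeast-1 with your region and ${YOUR_ACCOUNT_ID} with your AWS account ID
cd /home/ec2-user

# Log Docker in to ECR using the AWS credentials available on the instance
aws ecr get-login-password --region ap-southeast-1 | docker login --username AWS --password-stdin ${YOUR_ACCOUNT_ID}.dkr.ecr.ap-southeast-1.amazonaws.com

# Stop the running containers, pull the latest images, and start them in the background
docker-compose down &&
docker-compose pull &&
docker-compose up -d

The run.sh script expects a docker-compose.yml file in the same directory (the pipeline copies both files to /home/ec2-user). This file defines the services we want to deploy; in our case, three services: db, api, and nginx: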

version: '3'

services:
  db:
    image: postgres:latest
    environment:
      POSTGRES_DB: django_api_db
      POSTGRES_USER: postgres
      POSTGRES_PASSWORD: 123456!
    ports:
      - "5433:5432"
    volumes:
      - pgdata:/var/lib/postgresql/data/
    networks:
      - mynetwork

  api:
    container_name: api
    image: ${ECR_URL}:latest
    entrypoint: /usr/src/app/entrypoint.sh
    command: python manage.py runserver 0.0.0.0:8000
    ports:
      - "8000:8000"
    depends_on:
      - db
    environment:
      - DEBUG=True
      - DJANGO_DB_HOST=db
      - DJANGO_DB_PORT=5432
      - DJANGO_DB_NAME=django_api_db
      - DJANGO_DB_USER=postgres
      - DJANGO_DB_PASSWORD=123456!
      - AWS_ACCESS_KEY_ID=${AWS_ACCESS_KEY_ID}
      - AWS_SECRET_ACCESS_KEY=${AWS_SECRET_ACCESS_KEY}
    networks:
      - mynetwork

  nginx:
    restart: always
    container_name: nginx
    depends_on:
      - api
    ports:
      - '80:80'
    build:
      context: ./nginx
    networks:
      - mynetwork

volumes:
  pgdata:

networks:
  mynetwork:

The api service pulls its image from ECR through the ECR_URL environment variable, which the pipeline passes to the instance during deployment. Its command, python manage.py runserver, starts the Django RESTful API using Django’s development server. Avoid mounting a named volume over /usr/src/app in the api service: such a volume is populated from the image only when it is first created, so later deployments would keep running the old code.

The nginx service builds its image from the nginx directory in our repository, which serves as the build context, and publishes port 80, the default HTTP port, on the host.

Install Docker on Amazon Linux 2

We need to ensure Docker and Docker Compose are installed and properly set up on our EC2 instance before running our run.sh script.

First, let’s use our SSH pem key to connect to our EC2 instance. Replace your-ec2-hostname with the EC2 instance’s public DNS or IP address.

ssh -i /path/to/your/private/key ec2-user@your-ec2-hostname

If the connection fails with “Permission denied (publickey,gssapi-keyex,gssapi-with-mic).”, check the key’s file permissions: SSH ignores private keys that other users can read. Restrict them with:

chmod 400 /path/to/your/private/key

Next, update the installed packages to their latest versions:

sudo yum update -y

Install Docker using yum:

sudo yum install -y docker

Next, install Docker Compose:

sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
sudo chmod +x /usr/local/bin/docker-compose

Next, verify that Docker and Docker Compose are installed correctly:

docker --version
docker-compose --version

If docker-compose --version fails with “Error loading Python lib ‘/tmp/_MEIHv6dpR/libpython3.7m.so.1.0’: dlopen: libcrypt.so.1: cannot open shared object file: No such file or directory” (as happens on Amazon Linux 2023, which uses dnf), install the missing compatibility library:

sudo dnf install -y libxcrypt-compat

Add ec2-user to the docker group so it can run Docker commands without sudo; the change takes effect the next time you log in:

sudo usermod -a -G docker ec2-user

Start the Docker service:

sudo service docker start

To have Docker start automatically after a reboot, also enable the service:

sudo systemctl enable docker

Configure Bitbucket Pipelines

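Create a new file named bitbucket-pipelines.yml in the root of the repository. It assumes the Django project, including its Dockerfile, lives in a backend directory next to docker-compose.yml, run.sh, and the nginx directory: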

image: atlassian/default-image:2

pipelines:
  branches:
    master:
      - step:
          name: Build and Push
          services:
            - docker
          script:
            - echo "Building and pushing Docker images..."
            - docker build -t $IMAGE_NAME ./backend
            # Use the pipe to log in to ECR and push the image
            - pipe: atlassian/aws-ecr-push-image:1.3.0
              variables:
                AWS_ACCESS_KEY_ID: $AWS_ACCESS_KEY_ID
                AWS_SECRET_ACCESS_KEY: $AWS_SECRET_ACCESS_KEY
                AWS_DEFAULT_REGION: $AWS_REGION
                IMAGE_NAME: $IMAGE_NAME
                TAGS: "$BITBUCKET_BUILD_NUMBER latest"
            - echo "Copy files to build folder"
            - mkdir build
            - cp -R nginx/ build/
            - cp docker-compose.yml build/
            - cp run.sh build/
          artifacts:
            - build/**
      - step:
          name: Deploy artifacts using SCP to EC2
          script:
            - pipe: atlassian/scp-deploy:1.2.1
              variables:
                USER: ec2-user
                SERVER: $EC2_HOSTNAME
                REMOTE_PATH: /home/ec2-user
                LOCAL_PATH: '${BITBUCKET_CLONE_DIR}/build/*'
                SSH_KEY: $SSH_KEY
                EXTRA_ARGS: '-r'
                DEBUG: 'true'
      - step:
          name: SSH to EC2 to start docker
          script:
            - pipe: atlassian/ssh-run:0.4.2
              variables:
                SSH_USER: ec2-user
                SERVER: $EC2_HOSTNAME
                COMMAND: 'chmod +x /home/ec2-user/run.sh && /home/ec2-user/run.sh'
                SSH_KEY: $SSH_KEY
                ENV_VARS: >-
                  AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY_ID
                  AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY
                  ECR_URL=$ECR_URL
                DEBUG: 'true'

Finally, to activate the CI/CD pipeline, we push our code to the master branch. Bitbucket Pipelines detects the change and runs the three steps in order: it builds the image and pushes it to ECR, copies docker-compose.yml, run.sh, and the nginx directory to the EC2 instance over SCP, and then runs run.sh over SSH to pull the new image and restart the containers.
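In the first step, TAGS: "$BITBUCKET_BUILD_NUMBER latest" pushes the same image under two space-separated tags. run.sh, through docker-compose.yml, always deploys latest, while the build-number tag keeps each build addressable in ECR. A tiny sketch of how that value expands into image references; the function is illustrative, not part of the pipe:

```python
def pushed_image_refs(repository_uri: str, tags: str) -> list[str]:
    """Expand a space-separated TAGS value into full image references."""
    return [f"{repository_uri}:{tag}" for tag in tags.split()]


if __name__ == "__main__":
    # Example: build number 42 combined with the TAGS value from the pipeline
    for ref in pushed_image_refs("123456789012.dkr.ecr.ap-southeast-1.amazonaws.com/my-django-app", "42 latest"):
        print(ref)
```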

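Before pushing, add the EC2 instance’s public IP address to ALLOWED_HOSTS in our Django settings.py; otherwise Django rejects requests addressed to that host:

ALLOWED_HOSTS = ["X.X.X.X"]

Push the code to the Bitbucket master branch and follow the run on the repository’s Pipelines page.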
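An automatically assigned public IP changes when the instance is stopped and started (unless you attach an Elastic IP), so you may prefer to read ALLOWED_HOSTS from the environment rather than hardcode it. A minimal sketch for settings.py, assuming a hypothetical DJANGO_ALLOWED_HOSTS variable:

```python
import os
from typing import List, Optional


def allowed_hosts_from_env(value: Optional[str]) -> List[str]:
    """Split a comma-separated host list, dropping blanks and surrounding spaces."""
    if not value:
        return []
    return [host.strip() for host in value.split(",") if host.strip()]


# In settings.py; DJANGO_ALLOWED_HOSTS is a hypothetical variable name
ALLOWED_HOSTS = allowed_hosts_from_env(os.environ.get("DJANGO_ALLOWED_HOSTS"))
```

With this approach, the api service’s environment in docker-compose.yml would gain a line such as - DJANGO_ALLOWED_HOSTS=X.X.X.X, and a new IP only requires changing that value.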

Then open http://YOUR_EC2_IP/api to reach the Django API.
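The same check can be scripted as a quick smoke test after each deployment. A minimal sketch using only the Python standard library; api_url and endpoint_is_up are illustrative names, and treating any 2xx status as healthy is an assumption:

```python
import http.client
import urllib.request


def api_url(host: str, path: str = "/api") -> str:
    """Build the URL of the deployed API; nginx listens on port 80."""
    return f"http://{host}{path}"


def endpoint_is_up(url: str, timeout: float = 5.0) -> bool:
    """Return True if the URL answers with a 2xx status (redirects are followed)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (OSError, http.client.HTTPException, ValueError):
        # HTTPError and URLError (4xx/5xx, refused, DNS) and timeouts are OSError
        # subclasses; HTTPException covers malformed responses; ValueError bad URLs
        return False
```

For example, `endpoint_is_up(api_url("X.X.X.X"))` returns True once nginx and the api container are serving requests.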

Conclusion

In this tutorial, we have successfully automated the deployment of a Dockerized application to an EC2 instance using Bitbucket Pipelines and Amazon ECR. This approach enables continuous integration and continuous deployment of containerized applications, ensuring faster and more reliable deployments. Full source code is available on GitHub.
